The Documentation section describes the resources that make up HPC, HPC's operating system, and the special packages and software used on HPC resources. It also covers running compute jobs on HPC and provides information on storage.

You will need this information to create and run compute jobs on the HPC cluster, to use NAS storage space, and to use the licensed and open-source software that is available only on HPC resources.

Topics covered in the Documentation section include:

We are using ROCKS 6.0, based on a customized distribution of the Community Enterprise Operating System (CentOS). CentOS 6.0 Update 2 is a high-quality Linux distribution that gives HPC complete control of its open-source software packages and is fully customized to suit HPC research needs, without the need for license fees.

CentOS is modified for minor bug fixes and desired localized behavior. Many desktop and clustering-related packages were also added to our CentOS installation.

A number of white papers, tutorials, FAQs and other documentation on CentOS can be found on the official CentOS website.

- ls: list information about files.
- du: estimate file space usage.
- df: report filesystem disk space usage.
- top: display Linux tasks.
- ps: report a snapshot of the current processes.
- tail: output the last part of files (used when library linking issues arise, as explained on the troubleshooting page).
- ssh [node name]: log in to a node.

Options for ls:

-l : use the long listing format (one file per line).

-t : sort by modification time.

-h : print sizes in human readable format.

-a : list hidden files.

Options for ps:

-e : all processes.

-f : full.

Options for ssh:

-X : enable X forwarding.
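As a rough illustration of how the commands and options listed above combine, the following sketch runs them against a temporary directory. The directory and file names are made up for the example and are not part of the HPC setup.

```shell
# Hypothetical scratch area for the demonstration (not an HPC path).
demo_dir=$(mktemp -d)
printf 'line 1\nline 2\nline 3\n' > "$demo_dir/output.log"
touch "$demo_dir/.hidden"

# Long listing, sorted by modification time, human-readable sizes, hidden files included.
ls -ltha "$demo_dir"

# Space used by the directory, and free space on the filesystem that holds it.
du -h "$demo_dir"
df -h "$demo_dir"

# Full-format listing of all processes (first five lines only).
ps -ef | head -n 5

# Last two lines of a file.
last_lines=$(tail -n 2 "$demo_dir/output.log")
echo "$last_lines"
```

Single-letter options can usually be combined after one dash, which is why -l, -t, -h and -a appear together as -ltha.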

All programs that run under Linux are called processes. Processes run continuously in Linux, and you can kill or suspend them using various commands. When you start a program, a new process is created. This process runs within what is called an environment. The environment has characteristics that the process may interact with, and every program runs in its own environment. You can set parameters in this environment so that the running program can find the desired values when it runs.

Setting a parameter is as simple as typing VARIABLE=value. This sets a parameter named VARIABLE to the value that you provide.
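The sketch below illustrates the idea under one assumption that the text does not spell out at this point: a variable set with VARIABLE=value is visible to the current shell, and it reaches programs started from that shell only after it has been exported. The child shell started with bash -c stands in for "the running program".

```shell
# Set a variable; at this point it exists in the current shell only.
VARIABLE=value
echo "$VARIABLE"

# A child process does not see the variable until it is exported.
before=$(bash -c 'echo "${VARIABLE:-unset}"')
export VARIABLE
after=$(bash -c 'echo "${VARIABLE:-unset}"')

echo "child sees before export: $before"
echo "child sees after export:  $after"
```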

PATH is an environmental variable in Linux and other Unix-like operating systems that tells the shell which directories to search for executable files (i.e., ready-to-run programs) in response to commands issued by a user. It increases both the convenience and the safety of such operating systems and is widely considered to be the single most important environmental variable.

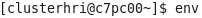

To see a list of the environment variables that are already set on your machine, use the env command.

This produces a long list, because Linux sets many environment variables for you by default. You can modify the values of most of these variables.

To see only the variables related to PATH, filter the list with env | grep PATH.

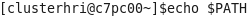

Another way to view the contents of PATH alone is to use the echo command with $PATH as an argument, that is, echo $PATH.
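A minimal sketch of the inspection commands described above. The last command, which prints one directory per line with tr, is an addition for readability and is not taken from the original page.

```shell
# List the environment (first five entries only, since the full list is long).
env | head -n 5

# Show only the entries that mention PATH.
env | grep PATH

# Print the value of PATH alone.
echo "$PATH"

# Optional: one directory per line.
echo "$PATH" | tr ':' '\n'
```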

Each user on a system can have a different PATH variable. PATH variables can be changed relatively easily. They can be changed just for the current login session, or they can be changed permanently (i.e., so that the changes will persist through future sessions).

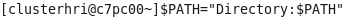

It is a simple matter to add a directory to a user's PATH variable (and thereby add it to the user's default search path). It can be accomplished for the current session by using the following command, in which directory is the full path of the directory to be added:

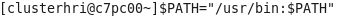

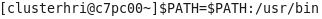

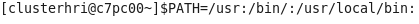

For example, to add the directory /usr/bin, the following would be used:

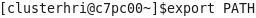

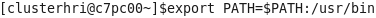

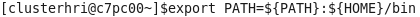

An alternative is to employ the export command, which is used to change aspects of the environment. Thus, the above absolute path could be added with the following two commands in sequence or their single-line equivalent.

That the directory has been added can be easily confirmed by again using the echo command with $PATH as its argument.
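The steps above can be sketched as follows. This assumes the directory is appended to the end of PATH, in line with the .bash_profile example on this page; the variable old_path is introduced only so that the change can be checked.

```shell
# Remember the starting value so the change can be compared afterwards.
old_path=$PATH

# Two commands in sequence: assign the new value, then export it.
PATH=$PATH:/usr/bin
export PATH

# The single-line equivalent would be:
#   export PATH=$PATH:/usr/bin

# Confirm that the directory has been added.
echo "$PATH"
```

The change lasts only for the current login session; a new session starts again from the default PATH.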

An addition to a user's PATH variable can be made permanent by adding it to that user's .bash_profile file. .bash_profile is a hidden file in each user's home directory that defines any specific environmental variables and startup programs for that user. Thus, for example, to add a directory named /usr/test to a user's PATH variable, it should be appended with a text editor to the line that begins with PATH so that the line reads something like PATH=$PATH:$HOME/bin:/usr/test.

It is important that each absolute path be directly (i.e., with no intervening spaces) preceded by a colon.
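The edit described above is normally made by hand in a text editor. As a hedged sketch, the same change can be scripted with sed; to keep the example harmless it works on a temporary stand-in file (the profile variable is hypothetical) rather than on the real ~/.bash_profile, and it assumes GNU sed as found on Linux.

```shell
# Hypothetical stand-in for ~/.bash_profile so the real file is not touched.
profile=$(mktemp)
printf 'PATH=$PATH:$HOME/bin\nexport PATH\n' > "$profile"

# Append :/usr/test to the line that begins with PATH= (no spaces around the colon).
sed -i 's|^PATH=.*|&:/usr/test|' "$profile"

# Show the result.
cat "$profile"
```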

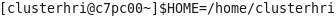

Setting HOME in this way would set the home directory to /home/clusterhri. This is appropriate if your login name is clusterhri and you have been given a directory named /home/clusterhri. If you want a different directory to be your home directory, you can indicate so by specifying the new directory name. The HOME directory is always the directory that you are placed in when you log in.


There are many advantages to using the HOME variable. You can always reach your home directory by typing just cd at the prompt, irrespective of the directory you are currently in. This immediately takes you to your HOME directory. In addition, scripts that use $HOME to refer to the home directory can be used by other users as well, since $HOME in their case refers to their own home directories.
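A small sketch of both points, assuming HOME is set and the directory exists, as it is after a normal login. The results directory name is hypothetical and is not created.

```shell
# Move somewhere else, then return home with a bare cd.
cd /tmp
cd
pwd

# A script that refers to $HOME works unchanged for any user.
results_dir="$HOME/results"   # hypothetical directory name
echo "Results would be stored in $results_dir"
```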

PATH is a very important environment variable. It sets the directories that the shell looks in when it has to execute a program. The shell searches all the directories that are present in the line above. Entries are separated by a colon (:), and you can add any number of directories to the list. The three directories in the example are only an illustration.

Note: The last entry in the PATH setting is a . (period). This is an important addition that you could make if it is not already present on your system. The period indicates the current directory in Linux. Whenever you type a command, Linux searches for that program in all the directories in its PATH. Since there is a period in the PATH, Linux also looks for the program in the current directory (the directory from which you execute the command). Thus, to execute a program that is in the current directory (perhaps a script you have written yourself), you do not have to type ./programname. You can simply type programname, since the current directory is already in your PATH.
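The effect of the period entry can be tried out with a throwaway script. In this sketch the temporary directory and the script named programname are created only for the demonstration.

```shell
# Create a small executable script in a temporary working directory.
workdir=$(mktemp -d)
cd "$workdir"
printf '#!/bin/bash\necho "programname ran"\n' > programname
chmod +x programname

# Add the current directory (.) as the last PATH entry for this session.
PATH=$PATH:.

# The script can now be started by name alone, without the leading ./
output=$(programname)
echo "$output"
```

Because the period is the last entry, directories listed earlier in PATH are still searched first.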

The storage system provides 100TB of disk storage using a high-performance parallel file system, which is tuned for both large-volume storage and fast access in a secure computing environment. The system supports large-scale and rapid data analytics.

The disk system is backed by a tape archive and a hierarchical storage management (HSM) system that adds a further 100TB of storage capacity for long-term data preservation and backup.

You can check available locations from clpc00, cluster1 and master nodes of clusters using df -h.

/scratch6, /c7scratch, /c8scratch and /c9scratch have been set up as scratch drives for submitting jobs to the respective clusters. /data9, /data10, /data11, /data12 and /data13 are NAS storage used for secondary backup.

Please note that /c5scratch, /c5scratch1, /c6scratch, /c6scratch1, /data3, /data4, /data5, /data6, /data7 and /data8 have been removed and their data copied to /data14.

Each /c$scratch is mounted on all the compute nodes of its respective cluster, and each /data$ is mounted on all master nodes as well as on clpc00 and cluster1.

Note: Users are requested not to use /data14 for storage, as it is being used as backup storage.
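As a sketch of how the df -h check might be applied to the locations named above, the loop below reports each one in turn. The test for whether the directory exists is an addition, so that the loop also runs on a machine where a given location is not mounted.

```shell
# Report usage for each scratch and NAS location that is visible on this node.
checked=0
for fs in /scratch6 /c7scratch /c8scratch /c9scratch /data9 /data10 /data11 /data12 /data13; do
    if [ -d "$fs" ]; then
        df -h "$fs"
    else
        echo "$fs is not mounted on this node"
    fi
    checked=$((checked + 1))
done
echo "Checked $checked locations"
```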

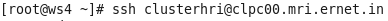

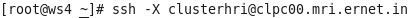

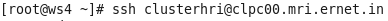

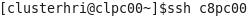

Using the c7-cluster

First log in to clpc00.

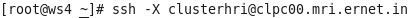

If you need X (GUI: Graphical User Interface), log in using the X forwarding option of ssh (-X).

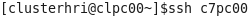

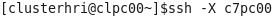

Then log in to the c7-cluster head node, c7pc00.

If you need a GUI, use the -X option of ssh here as well.

Head node Name of the cluster is c7pc00.clusternet

Head node IP of the cluster is 192.168.1.207

(The C7 cluster is an 80-node sequential cluster. Compute nodes are named compute-0-0 to compute-0-79, of which 50 are currently operational.)

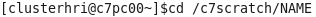

Which area to work in:

For the C7 cluster, the work area is /c7scratch. /c7scratch is mounted on clpc00, cluster1, the head nodes of all clusters and all the compute nodes of the c7-cluster.

If you do not yet have a directory on /c7scratch, you can create one.

NAME can be replaced by a name of your choice.
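A hedged sketch of creating a personal work area. Using mkdir -p is an assumption, since the original command is not shown on this page, and taking the directory name from the login name ($USER) is only one possible choice for NAME. The existence check lets the sketch run harmlessly on a machine where /c7scratch is not mounted.

```shell
# Work area root for the C7 cluster, and a directory name of your choice.
scratch_root=/c7scratch
name=${USER:-NAME}
workdir="$scratch_root/$name"

if [ -d "$scratch_root" ]; then
    # Create the directory if it does not exist yet, then move into it.
    mkdir -p "$workdir" && cd "$workdir"
else
    echo "$scratch_root is not mounted on this machine"
fi
```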

Batch Queue System

This cluster currently has only a single queue (default); users must submit jobs to this queue.

The queue has 50 nodes configured; each node has 8 CPU cores and 24GB of memory.

Intel Cluster Studio Version 2012.0.032 is installed in this cluster.

Use one of the commands below in your .profile or .bash_profile to set the Intel Cluster Studio path permanently.

- source /export/apps/ics_2012.0.032/SetIntelPath.sh intel64 (For 64 Bit code)

- source /export/apps/ics_2012.0.032/SetIntelPath.sh ia32 (For 32 Bit code)

- Users submitting serial jobs need to set the variable below in their .profile or .bash_profile file.

- export OMP_NUM_THREADS=1
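Put together, the profile additions might look like the fragment below. The check that the setup script exists is an addition, not part of the original instructions; it lets the same profile be shared with machines where Intel Cluster Studio is not installed.

```shell
# Possible additions to ~/.bash_profile on the cluster head node.
intel_setup=/export/apps/ics_2012.0.032/SetIntelPath.sh

# Load the 64-bit Intel environment only if the setup script is present.
if [ -f "$intel_setup" ]; then
    source "$intel_setup" intel64
fi

# Serial jobs: restrict OpenMP to a single thread.
export OMP_NUM_THREADS=1
```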

All users are requested to submit jobs through the batch queue. Users who run jobs directly on any cluster, bypassing the queue, risk action being taken against them.

Users are advised to create one job submission script per job type, as in the example below (e.g. submit.sh).

[clusterhri@c7pc00 ~]$ cat submit.sh

#!/bin/bash

#PBS -l nodes=2:ppn=8

#PBS -N job_name

#PBS -e error_file

#PBS -o output_file

# Run 16 MPI processes (2 nodes x 8 cores).

mpirun -f $PBS_NODEFILE -np 16 ./a.out

c7-cluster script file
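The process count passed to -np in the script above equals nodes multiplied by ppn. As an illustrative sketch, not part of the cluster's own tooling, a script of this shape can be generated from those two numbers so that the values stay consistent. The temporary output location is chosen only for the example.

```shell
# Resources to request: 2 nodes with 8 cores each, as in the C7 example.
nodes=2
ppn=8
np=$((nodes * ppn))

# Write the submission script to a temporary location (hypothetical path).
script=$(mktemp -d)/submit.sh
cat > "$script" <<EOF
#!/bin/bash
#PBS -l nodes=${nodes}:ppn=${ppn}
#PBS -N job_name
#PBS -e error_file
#PBS -o output_file
mpirun -f \$PBS_NODEFILE -np ${np} ./a.out
EOF

cat "$script"
```

The backslash before $PBS_NODEFILE keeps that variable unexpanded in the generated file, so that it is filled in by the batch system when the job runs.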

- To submit the job, run qsub with the name of the script (for example, qsub submit.sh).

- To see the list of running or queued jobs, run qstat -a.

- Node status can be seen using the pbsnodes command. If state=free, the node is ready to accept a job. If state=job-exclusive, the node is busy with a job. If state=down, state=offline or state=unknown, the node is not ready to accept a job due to some problem.

- Users can ssh to the respective node and use top for more detailed output.

- Users can see the queue status by running qstat -q (E means that the queue is enabled and R means that the queue is running).

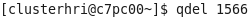

- Users can delete an unwanted job by passing its job ID to the qdel or canceljob command.

If a user faces a problem submitting a job, the commands below can help to find the possible reason.

[clusterhri@c7pc00 ~]$ checkjob 1566

OR

[clusterhri@c7pc00 ~]$ tracejob 1566

OR

[clusterhri@c7pc00 ~]$ qstat -f 1566

Applications installed on the C7 cluster

- armadillo-devel, atlas, octave, blas, boost, compat-gcc, pvm, topdrawer, gnuplot_grace

Using the c8-cluster

First log in to clpc00.

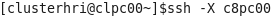

If you need X (GUI: Graphical User Interface), log in using the X forwarding option of ssh (-X).

Then log in to the c8-cluster head node, c8pc00.

If you need a GUI, use the -X option of ssh here as well.

Head node Name of the cluster is c8pc00.clusternet

Head node IP of the cluster is 192.168.1.208

(The C8 cluster is a 48-node sequential cluster. Compute nodes are named compute-0-0 to compute-0-47.)

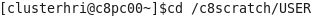

Which area to work in:

For the C8 cluster, the work area is /c8scratch. /c8scratch is mounted on clpc00, cluster1, the head nodes of all clusters and all the compute nodes of the c8-cluster.

If you do not yet have a directory on /c8scratch, you can create one.

USER can be replaced by a name of your choice.

Batch Queue System

This cluster currently has only a single queue (default); users must submit jobs to this queue.

The queue has 48 nodes configured; each node has 12 CPU cores and 48GB of memory.

Intel Cluster Studio Version 2012.0.032 is installed in this cluster.

Use one of the commands below in your .profile or .bash_profile to set the Intel Cluster Studio path permanently.

- source /export/apps/ics_2012.0.032/SetIntelPath.sh intel64 (For 64 Bit code)

- source /export/apps/ics_2012.0.032/SetIntelPath.sh ia32 (For 32 Bit code)

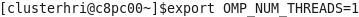

- Users submitting serial jobs need to set the variable below in their .profile or .bash_profile file.

- export OMP_NUM_THREADS=1

All users are requested to submit jobs through the batch queue. Users who run jobs directly on any cluster, bypassing the queue, risk action being taken against them.

Users are advised to create one job submission script per job type, as in the example below (e.g. submit.sh).

[clusterhri@c8pc00 ~]$ cat submit.sh

#!/bin/bash

#PBS -l nodes=2:ppn=12

#PBS -N job_name

#PBS -e error_file

#PBS -o output_file

# Run 24 MPI processes (2 nodes x 12 cores).

mpirun -f $PBS_NODEFILE -np 24 ./a.out

c8-cluster script file

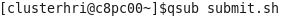

- To submit the job, run qsub with the name of the script (for example, qsub submit.sh).

- To see the list of running or queued jobs, run qstat -a.

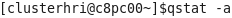

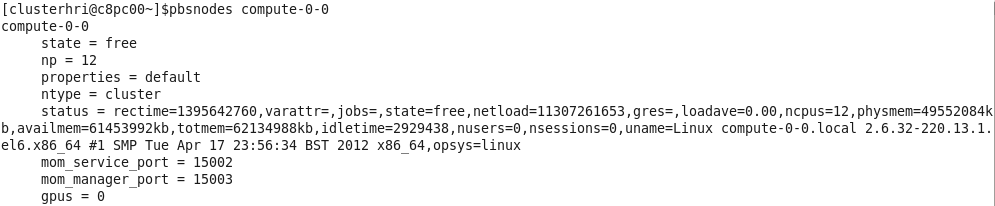

- Node Status can be seen using

pbsnodescommand as mentioned below. Ifstate=freethen it means node is ready to accept the job. If it is showingstate=job-exclusivethat means node is busy with job. If is showingstate=downorstate=offlineorstate=unkownthat means node is not ready to accept the job due to some problem. - User can

sshrespective node and usetopfor more appropriate output. - Users can see queue status by running below command:(E represents that queue is enable and R represents that queue is running)

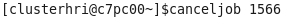

- Users can delete an unwanted job using below command and job id.

[clusterhri@c8pc00 ~]$ ssh compute-0-0

[clusterhri@c8pc00 ~]$ qdel 1343

OR

[clusterhri@c8pc00 ~]$ canceljob 1343

If a user faces a problem submitting a job, the commands below can help to find the possible reason.

[clusterhri@c8pc00 ~]$ checkjob 1343

OR

[clusterhri@c8pc00 ~]$ tracejob 1343

OR

[clusterhri@c8pc00 ~]$ qstat -f 1343

Applications installed on the C8 cluster

- gsl, atlas, octave, blas, boost, compat-gcc, pvm, topdrawer, gnuplot_grace, NAG

Using the c9-cluster

First log in to clpc00.

Then log in to c9pc00.

Head node Name of the cluster is c9pc00.clusternet

Head node IP of the cluster is 192.168.1.209

(The C9 cluster is a 48-node parallel cluster. Compute nodes are named compute-0-0 to compute-0-47.)

Note: Submitting serial jobs on C9 is deprecated.

Which area to work in:

For the C9 cluster, the work area is /c9scratch. /c9scratch is mounted on clpc00, cluster1, the head nodes of all clusters and all the compute nodes of the c9-cluster.

If you do not yet have a directory on /c9scratch, you can create one.

[clusterhri@c9pc00 ~]$ cd /c9scratch/NAME/

NAME can be replaced by a name of your choice.

Batch Queue System

This cluster currently has only a single queue (default); users must submit jobs to this queue.

The queue has 48 nodes configured; each node has 16 cores and 128GB of memory.

Intel Cluster Studio Version 2013 is installed in this cluster.

MPI PATHS:

Intel

Installed Path : /opt/intel/impi/4.1.0.024/bin64

Library Path : /opt/intel/impi/4.1.0.024/lib64

icc : /opt/intel/bin/icc

ifort : /opt/intel/bin/ifort

Openmpi

Installed Path : /opt/openmpi-1.6

Library Path : /opt/openmpi-1.6/lib

mpicc : /opt/openmpi-1.6/bin/mpicc

mpicxx : /opt/openmpi-1.6/bin/mpicxx

mpif77 : /opt/openmpi-1.6/bin/mpif77

mpif90 : /opt/openmpi-1.6/bin/mpif90

Users are advised to create one job submission script per job type, as in the example below (e.g. submit.sh).

[clusterhri@c9pc00 ~]$ cat submit.sh

#!/bin/bash

#PBS -l nodes=2:ppn=16

#PBS -N job_name

#PBS -e error_file

#PBS -o output_file

# Run 32 MPI processes (2 nodes x 16 cores).

mpirun -f $PBS_NODEFILE -np 32 ./a.out

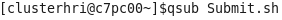

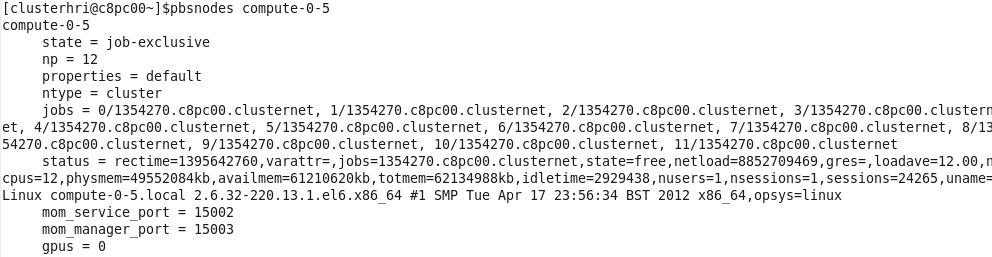

To submit the job, run the command below.

[clusterhri@c9pc00 ~]$ qsub submit.sh

You can see the list of running or queued jobs by running the command below.

[clusterhri@c9pc00 ~]$ qstat -a

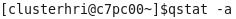

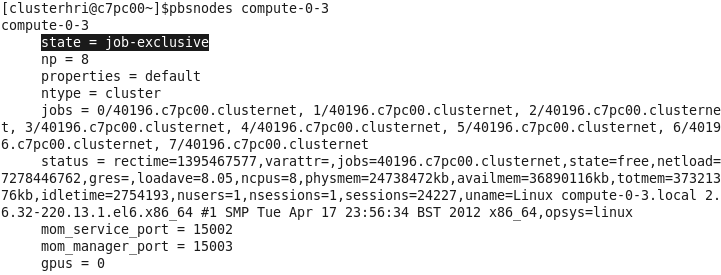

Node status can be seen using the pbsnodes command, as shown below. If state=free, the node is ready to accept a job. If state=job-exclusive, the node is busy with a job. If state=down, state=offline or state=unknown, the node is not ready to accept a job due to some problem.

[clusterhri@c9pc00 ~]$ pbsnodes compute-0-0
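The meaning of each state can be captured in a small helper, sketched below. The function name describe_state is hypothetical, and the state string is passed in by hand rather than parsed from pbsnodes output, so the sketch also runs away from the cluster.

```shell
# Translate a node state reported by pbsnodes into a short explanation.
describe_state() {
    case "$1" in
        free)                 echo "ready to accept jobs" ;;
        job-exclusive)        echo "busy with a job" ;;
        down|offline|unknown) echo "not ready to accept jobs" ;;
        *)                    echo "state not covered here" ;;
    esac
}

describe_state free
describe_state job-exclusive
describe_state offline
```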

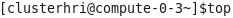

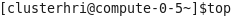

Users can ssh to the respective node and use top for more detailed output.

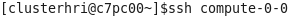

[clusterhri@c9pc00 ~]$ ssh compute-0-0

[anura@compute-0-0 ~]$ top

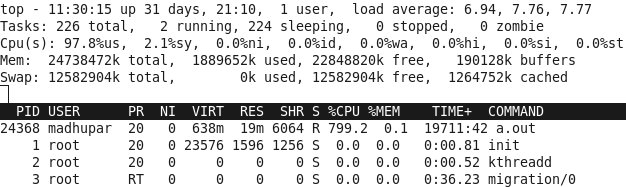

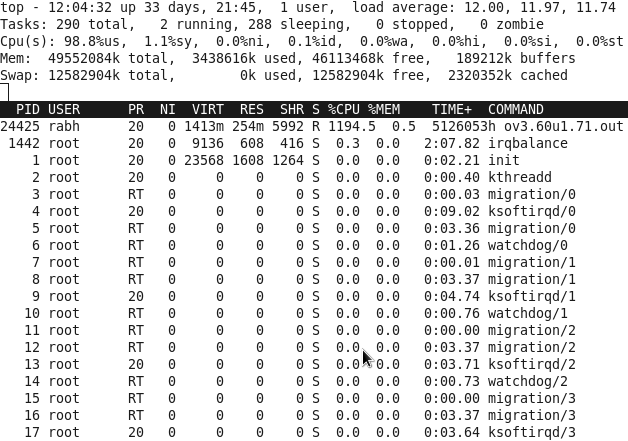

Users can see the queue status by running the command below (E means that the queue is enabled and R means that the queue is running).

[clusterhri@c9pc00 ~]$ qstat -q

Users can delete an unwanted job using one of the commands below and the job ID.

[clusterhri@c9pc00 ~]$ qdel 1253

OR

[clusterhri@c9pc00 ~]$ canceljob 1253

If a user faces a problem submitting a job, the commands below can help to find the possible reason.

[clusterhri@c9pc00 ~]$ checkjob 1253

OR

[clusterhri@c9pc00 ~]$ tracejob 1253

OR

[clusterhri@c9pc00 ~]$ qstat -f 1253

Useful Commands for C13-Cluster

Users can see the queue status by running the command below (E means that the queue is enabled and R means that the queue is running).

[clusterhri@c13pc00 ~]$ qstat -a

Users can see which nodes their jobs are running on by running the command below.

[clusterhri@c13pc00 ~]$ qstat -n1

Users can see the node status (free / busy) by running the command below.

[clusterhri@c13pc00 ~]$ pbsnodes -avS