Mini-Guide for New User:

This guide is written on the assumption that you have never used a cluster; the examples below should help you become familiar with the HPC facility. Users commonly try to run jobs without understanding what they are doing, and such actions may affect running or critical jobs on the cluster.

Please read and understand the steps below; for more detail, refer to the Documentation.

- You can connect to any cluster through the cluster gateway server clpc00.hri.res.in (192.168.3.215).

- clpc00 (192.168.3.215) is the gateway from the HRI campus LAN into the HPC network. After a successful login you land on clpc00, and from there you can access the clusters you need. For serial jobs, use only the C8, C10 and C11 clusters; for parallel shared-memory jobs, the C9, C11, C12 and C13 clusters are recommended.

- You can change your user/login password using the command yppasswd from clpc00.

- The home area does not have a quota system at present. Users should refrain from using it to store nontrivial or large data files, because doing so reduces performance. Any job that causes heavy load on our NFS server will be terminated without advance notice.

- Installation of software/packages is deprecated in

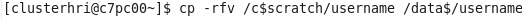

/home area, rather use/c$scratch/username -

After completion of jobs, remove/copy your “Important DATA“ from

/c$scratchto/data$boxes. This practice respite/c$scratchboxes in storage and load. - If you generate data related to your research work. It is User’s responsibility to BACK IT UP SOMEWHERE ELSE OTHER THAN HRI HPC STORAGE. HRI HPC will not be responsible in any case of severe crash/failure in Storage servers or lose of DATA.

- An alternate gateway, cluster1.hri.res.in (192.168.3.228), is also available. You can ssh to it for remote access/login.

Cluster1 is recommended for copying data between the scratch and data boxes.

Cluster1 can also be used for copying data (scp) between the HRI firewall/gateway and the HPC scratch/data boxes.

- You can also connect to any cluster through cluster1.hri.res.in (192.168.3.228).
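The scratch-to-data housekeeping described above could be scripted roughly as follows. This is a minimal sketch, not an HRI-provided tool: the function name archive_results is hypothetical, the destination is assumed to be a new, empty directory, and the scratch copy is removed only after the copy has been verified.

```shell
#!/bin/sh
# archive_results SRC DST  (hypothetical helper, for illustration only)
# Copy the contents of SRC (a job directory on scratch) into DST (a new
# directory on a data box), verify the copy, then remove SRC.
archive_results() {
    src=$1
    dst=$2
    # Refuse to continue if the source is missing (also guards rm below).
    [ -d "$src" ] || { echo "no such directory: $src" >&2; return 1; }
    mkdir -p "$dst" || return 1
    # -R: recursive, -p: preserve timestamps and permissions.
    cp -Rp "$src"/. "$dst"/ || return 1
    # diff -r exits non-zero if any file differs or exists on one side only,
    # so DST must not contain unrelated files.
    if diff -r "$src" "$dst" >/dev/null; then
        rm -rf "$src"
        echo "archived $src to $dst"
    else
        echo "verification failed; $src left in place" >&2
        return 1
    fi
}
```

A call might look like `archive_results /c11scratch/username/job1 "$DATA_DIR/job1"`, where DATA_DIR is a placeholder for your own directory on the data boxes. Keeping a further backup outside HRI HPC storage remains your responsibility.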

cchrichandan@ipc60:~$ ssh -X username@clpc00.hri.res.in

Replace “username” with your assigned login id.

For more detail refer to the Documentation. Replace “clustername” with the name of your preferred cluster, i.e. one of c8pc00, c9pc00, c10pc00, c11pc00, c12pc00, c13pc00.
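The cluster names and job-type recommendations given in this guide can be captured in a small shell helper. This is only a sketch: the function names master_node, clusters_for and login_command are hypothetical, and it assumes that the short host names (for example c9pc00) resolve from the gateway. login_command only prints an ssh command; it does not run it.

```shell
#!/bin/sh
# master_node NAME: print the master node host for cluster c8 ... c13,
# following the host names listed in this guide (c8pc00 ... c13pc00).
master_node() {
    case "$1" in
        c8|c9|c10|c11|c12|c13) echo "${1}pc00" ;;
        *) echo "unknown cluster: $1" >&2; return 1 ;;
    esac
}

# clusters_for KIND: print the clusters this guide names for a job type.
clusters_for() {
    case "$1" in
        serial)   echo "c8 c10 c11" ;;
        parallel) echo "c9 c11 c12 c13" ;;
        *) echo "usage: clusters_for serial|parallel" >&2; return 1 ;;
    esac
}

# login_command USER CLUSTER: print (not run) the ssh command for the
# hop from the gateway to the cluster's master node.
login_command() {
    host=$(master_node "$2") || return 1
    echo "ssh -X $1@$host"
}
```

For instance, `clusters_for parallel` lists the clusters recommended for parallel shared-memory jobs, and `login_command username c12` prints the command to type on the gateway.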

For most purposes, users should not need to log in directly to any compute node other than the master node (batch queue server).

[chandan@clpc00 ~]$ passwd

The change takes some time to become effective; please send us an e-mail if it takes more than 10 minutes.

Users can check how much space their home area uses:

[chandan@clpc00 ~]$ du -sh /home/chandan/
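Because the home area has no quota, a periodic self-check can help keep it small. The sketch below wraps du in two hypothetical functions, usage_kb and check_usage; the limit is a personal threshold that you choose yourself, not a site policy.

```shell
#!/bin/sh
# usage_kb DIR: print the disk usage of DIR in kilobytes (du -sk).
usage_kb() {
    du -sk "$1" 2>/dev/null | awk '{print $1}'
}

# check_usage DIR LIMIT_KB: print a warning and return 1 when DIR uses
# more than LIMIT_KB kilobytes; otherwise report the current usage.
check_usage() {
    used=$(usage_kb "$1")
    # An empty result means du could not read the directory.
    [ -n "$used" ] || { echo "cannot read $1" >&2; return 1; }
    if [ "$used" -gt "$2" ]; then
        echo "WARNING: $1 uses ${used} KB (limit $2 KB)"
        return 1
    fi
    echo "OK: $1 uses ${used} KB"
}
```

For example, `check_usage /home/chandan 1048576` warns once the home directory grows beyond 1 GB.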

[chandan@mn1 ~]$ cd /c11scratch/chandan/

Note: Users must not attempt to access any machine inside the HPC network other than the clusters. Users found doing so may face the most severe action.